


Stochastic Gradient Descent as the Driver of AI

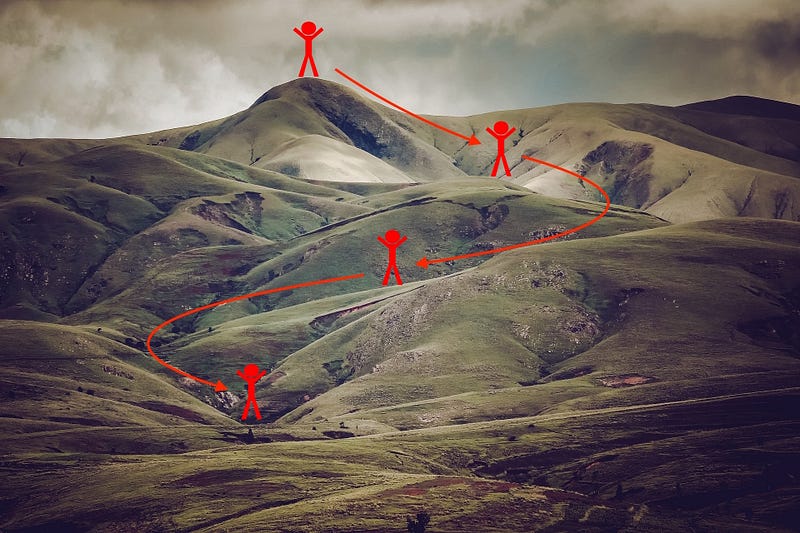

Hiking downhill == gradient descent

We can imagine the world of gradient descent as a huge hill, and the process of gradient descent as a person walking downhill. The person needs to find the shortest way down and choose a good step size (in gradient descent, the step alpha, also called the learning rate) so as not to fall down and not to get stuck on flat ground. Without the slope (the gradient), the person probably cannot reach the bottom of the hill.
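To make the analogy concrete, here is a minimal Python sketch of plain gradient descent on a one-dimensional "hill". The function f(x) = (x - 3)^2, its gradient, the starting point, and the step sizes are assumptions chosen only for illustration; `alpha` plays the role of the step described above.

```python
def gradient_descent(grad, x0, alpha, steps):
    """Repeatedly step against the gradient (downhill) with step size alpha."""
    x = x0
    for _ in range(steps):
        x = x - alpha * grad(x)
    return x

# Hypothetical "hill": f(x) = (x - 3)**2, whose lowest point is at x = 3.
def grad_f(x):
    return 2 * (x - 3)

print(gradient_descent(grad_f, x0=0.0, alpha=0.1, steps=100))    # very close to 3
print(gradient_descent(grad_f, x0=0.0, alpha=1.1, steps=100))    # huge: steps overshoot and diverge
print(gradient_descent(grad_f, x0=0.0, alpha=0.001, steps=100))  # still far from 3: steps too small
```

With a moderate alpha the walker reaches the bottom at x = 3. With alpha too large, every step overshoots the valley and the iterate runs away (the "fall"). With alpha very small, the walker has barely moved after 100 steps, much like wandering on flat ground.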


Minimizing the cost is like finding the lowest point in a hilly landscape

The word “stochastic” describes a system or process that is linked with random probability. Hence, in Stochastic Gradient Descent, a few samples are selected randomly, instead of the whole dataset, for each iteration. In gradient descent, the term “batch” denotes the total number of samples from a dataset used to calculate the gradient in each iteration. In typical gradient descent optimization, such as Batch Gradient Descent, the batch is the whole dataset. Using the entire dataset is useful for reaching the minimum along a less noisy, less random path, but the problem arises when datasets get big.
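A minimal sketch of Batch Gradient Descent, assuming a hypothetical linear-regression problem (fit y ≈ w·x + b by minimizing mean squared error) on synthetic, noise-free data. The function name `batch_gradient_descent`, the data, and the hyperparameters are illustrative choices, not taken from the original. Note that every iteration touches all n samples to compute one gradient, which is exactly the cost that grows with the dataset.

```python
import numpy as np

def batch_gradient_descent(X, y, alpha=0.1, iterations=1000):
    """Fit y ~ w*x + b by minimizing mean squared error.
    Every iteration uses the WHOLE dataset: the batch is the full dataset."""
    w, b = 0.0, 0.0
    n = len(X)
    for _ in range(iterations):
        error = (w * X + b) - y               # residuals for all n samples
        grad_w = (2 / n) * np.dot(error, X)   # d(MSE)/dw over the full dataset
        grad_b = (2 / n) * error.sum()        # d(MSE)/db over the full dataset
        w -= alpha * grad_w
        b -= alpha * grad_b
    return w, b

# Hypothetical synthetic data: true w = 2, b = 1.
rng = np.random.default_rng(0)
X = rng.uniform(0, 1, size=1_000)
y = 2 * X + 1
print(batch_gradient_descent(X, y))   # approaches (2.0, 1.0)
```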


Suppose you have a million samples in your dataset. If you use a typical gradient descent optimization technique, you will have to use all one million samples to complete one iteration, and this has to be repeated for every iteration until the minimum is reached. Hence, it becomes computationally costly. Stochastic Gradient Descent (SGD) solves this problem: it uses only a single sample, i.e., a batch size of one, to perform each iteration. The samples are randomly shuffled, and one of them is selected for each iteration.
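In numbers: with one million samples, each Batch Gradient Descent iteration needs one million per-sample gradient computations, while each SGD iteration needs only one. The sketch below assumes the same kind of hypothetical linear-regression problem (y ≈ w·x + b, squared error) with made-up, noise-free data. It reshuffles the data every epoch and updates the parameters after each sample. The `batch_size` parameter is an illustrative addition: with its default of 1 the function is plain SGD, and a small value greater than 1 gives the "few samples" (mini-batch) variant.

```python
import random

def sgd(X, y, alpha=0.05, epochs=20, batch_size=1, seed=0):
    """Fit y ~ w*x + b by stochastic gradient descent.
    batch_size=1 is classic SGD (one sample per update); a small
    batch_size > 1 gives mini-batch SGD. Data are reshuffled every epoch."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(indices)                      # new random order each pass
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            grad_w = grad_b = 0.0
            for i in batch:
                error = (w * X[i] + b) - y[i]     # residual of one sample
                grad_w += 2 * error * X[i]
                grad_b += 2 * error
            # Average over this small batch only (the last batch may be shorter).
            w -= alpha * grad_w / len(batch)
            b -= alpha * grad_b / len(batch)
    return w, b

# Hypothetical noise-free data: true w = 2, b = 1.
X = [i / 1000 for i in range(1000)]
y = [2 * x + 1 for x in X]
print(sgd(X, y))                              # one sample per update
print(sgd(X, y, batch_size=32, epochs=200))   # mini-batches of 32 samples
```

Each update here is cheap because it looks at one (or a few) samples, at the price of a noisier path toward the minimum than the full-batch version.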

Overall, training almost every deep learning (DL) model is based on gradient descent (GD) or one of its variants. Hence we can say that GD drives AI, since DL is part of machine learning (ML), which in turn is part of AI (AI → ML → DL). Because of GD, AI can classify cats and dogs in photos, recognize handwriting, and perform thousands of other tasks. If in the 1980s the problem was computing power, today, with GPUs and TPUs, we are no longer lacking it. We can train almost any type of model; what we mainly need is data.

By Dr. Farruh on January 4, 2021.

Exported from Medium on May 25, 2024.